Automatic Algorithm Configuration for Optimization Solvers

One of the most exciting research areas in operations research is automatic algorithm configuration (AAC). It caught first the ML community's attention given its practical relevance in several tasks such as hyperparameters tuning.

In this article, I would like to highlight that there is room to push further the boundaries in this field when OR and ML communities join efforts. Indeed, the area is as relevant to the OR community as it is to the ML community.

Context

An algorithm can be defined as a sequence of instructions to be followed for solving a specific problem. It has a set of parameters that can take various values. Based on these values, the algorithm performance may vary significantly. A famous example is the hyperparameters of machine learning algorithms, which can substantially impact their performance. This led to the AAC research area, which has attracted significant attention over the last two decades.

Automatic Algorithm Configuration

AAC is concerned with the automated search for the most suitable parameter configuration(s) of a parametrized algorithm such as the hyperparameters of a deep learning network. Formally, given a very large (possibly infinite) instance space from which only a small subset of instances are known, the goal is to optimize the expected performance on the whole instance space. That is, the goal of the AAC process is to find configurations that perform well on instances that were not used in the AAC process itself while belonging to the same problem space.

Optimization Solvers

An optimization solver is a software package, including efficient implementations of optimization algorithms, specifically designed for the solution of optimization problems. We distinguish two types: Commercial solvers such as CPLEX and Gurobi, and Open Source solvers such as SCIP.

AAC State-of-the-art

The OR community also needs to tune several parameters used in metaheuristics, solvers, etc. For instance, the CPLEX solver has several parameters that can be tuned. Seeking to find the right configurations of parameters, many have initiated the development of AAC tuners. Within the literature, we refer to ParamILS, SMAC, and irace, which are highly cited and used by the OR community to tune solvers, metaheuristics, etc. It is worth mentioning that SMAC is also used by the ML community since it contains several facades.

Relevance

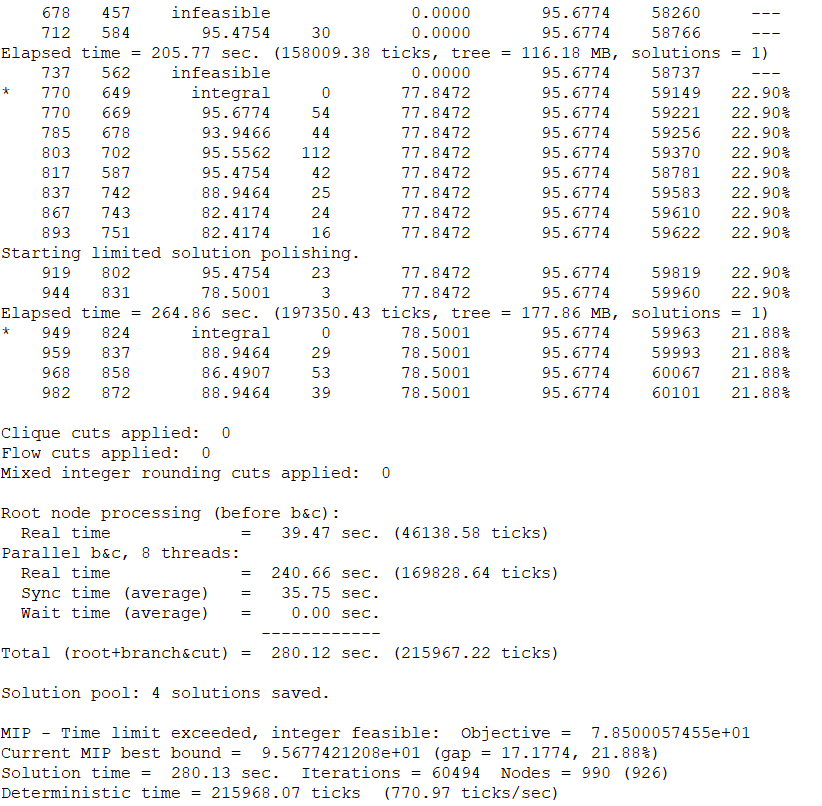

AAC is very relevant when dealing with complex optimization problems. These problems usually have difficult instances, which solvers struggle to solve in reasonable execution time. Thus, one may be tempted to tune the solver hoping to find a parameter configuration that accelerates convergence to (near-)optimal solutions.

Real Applications

We have had experiences using AAC to find good feasible solutions for complex supply chain optimization problems that include production scheduling, inventory management, and distribution. Using AAC, the solutions found can be used as a warm-start (starting point) for more sophisticated optimization methods such as Benders and Dantzig-Wolfe decompositions.

As witnessed again in this article, in research, simple ideas are usually the key or the starting point to tackling the most complex problems. This is a life principle that should be highlighted again and again in research contexts as well as others !

What do you think about the AAC research area? I am looking forward to hearing your ideas :-)